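Encryption is usually a security topic, but it also matters for privacy. The goal of encryption is to stop others from reading encrypted information… but stopping others from reading your users' information is one way of keeping it private. Users are often limited in what they can do about this themselves, but with your help as the provider of a service they use, encryption can help them keep their data private.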

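There are three related ways to apply encryption to help users keep their data private: encryption in transit, encryption at rest, and end-to-end encryption.

- Encryption in transit means keeping data encrypted between the user and your site: that is, HTTPS. You've probably already set up HTTPS for your site, but are you sure all data sent to your site is encrypted? That's what redirects and HSTS are for; they're described below and should be part of your HTTPS setup.

- Encryption at rest means encrypting the data you store on your servers. This helps protect against data breaches and is an important part of your security stance.

- End-to-end encryption means encrypting data on the client before it reaches your server. This protects user data even from you: you can store your users' data, but you can't read it. It's difficult to implement and isn't suitable for every kind of application, but it's a strong aid to user privacy, because nobody except the users themselves can see their data.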

HTTPS

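The first step is to serve your web service over HTTPS. You have most likely done this already, but if not, it's an important step. HTTPS is HTTP, the protocol browsers use to request web pages from a server, encrypted with TLS (the successor to SSL, and still often called SSL). This means an outside attacker can't read or tamper with HTTPS requests between the sender (your user) and the recipient (you): the traffic is encrypted, so they can neither read it nor change it. That is encryption in transit: protecting data while it moves from the user to you, or from you to the user. HTTPS encryption in transit also stops the user's ISP, or the Wi-Fi provider they're using, from reading the contents of what they send you as part of their relationship with your service.

HTTPS can also affect what your service is able to do: many JavaScript APIs are only available to sites served over HTTPS. MDN has a more comprehensive list, but APIs gated behind secure contexts include Service Workers, Push Notifications, Web Share and Web Crypto, as well as some device APIs.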
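To illustrate that gating, here is a minimal TypeScript sketch that checks `isSecureContext` before registering a service worker. The `BrowserEnv` interface, the function name and the `/sw.js` script path are hypothetical; the environment is passed in as a parameter (defaulting to the real globals) only so the check can be exercised outside a browser.

```typescript
// Minimal shape of the browser globals this sketch relies on (hypothetical
// interface; in a page these come from `window` and `navigator`).
interface BrowserEnv {
  isSecureContext?: boolean;
  navigator?: {
    serviceWorker?: { register(scriptUrl: string): Promise<unknown> };
  };
}

type RegistrationResult = "registered" | "insecure-context" | "unsupported";

// Registers a service worker only when the page is a secure context.
// Browsers do not expose navigator.serviceWorker on plain-HTTP pages
// (http://localhost is treated as secure, which helps during development).
async function registerServiceWorkerIfSecure(
  scriptUrl: string,
  env: BrowserEnv = globalThis as unknown as BrowserEnv,
): Promise<RegistrationResult> {
  if (env.isSecureContext !== true) {
    return "insecure-context";
  }
  const serviceWorker = env.navigator?.serviceWorker;
  if (serviceWorker === undefined) {
    return "unsupported";
  }
  await serviceWorker.register(scriptUrl);
  return "registered";
}
```

In a page you would call `registerServiceWorkerIfSecure("/sw.js")` and, on an HTTP page, get `"insecure-context"` back instead of a thrown error.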

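To serve your site over HTTPS you need an SSL certificate. Certificates can be created for free through Let's Encrypt, or are often provided by your hosting provider if you use a hosted service. It's also possible to use a third-party service that "proxies" your web service and provides HTTPS along with caching and CDN services. There are many such services, for example Cloudflare and Fastly; which one to use depends on your current infrastructure. In the past, HTTPS could be burdensome or expensive to implement, which is why it tended to be used only on payment pages or particularly sensitive origins; but freely available certificates, improvements to standards and widespread browser support have removed all of those obstacles.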

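Action

- Enable HTTPS for everything on your server (whichever method you choose).

- Consider using a proxy in front of your server, such as Cloudflare (httpsiseasy.com/ explains the process).

- Let's Encrypt will walk you through creating your own Let's Encrypt SSL certificate.

- Or create your own certificate directly with OpenSSL and have a certificate authority (CA) of your choice sign it (Enabling HTTPS explains how to do this in detail).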
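Once you have a certificate, serving with it is mostly configuration. The sketch below uses Node's built-in `https` module and assumes certbot's default file layout under `/etc/letsencrypt/live/<domain>/`; the domain `example.com`, the port and the function names are placeholders, and in many deployments TLS is terminated by a proxy or load balancer instead of by the application.

```typescript
import { readFileSync } from "node:fs";
import { createServer, type Server } from "node:https";

// Paths where certbot (a Let's Encrypt client) stores a domain's certificate
// chain and private key by default. The domain is a placeholder.
function letsEncryptPaths(domain: string): { cert: string; key: string } {
  const dir = `/etc/letsencrypt/live/${domain}`;
  return { cert: `${dir}/fullchain.pem`, key: `${dir}/privkey.pem` };
}

// Starts an HTTPS server with those files. Reading privkey.pem usually needs
// elevated permissions, and binding port 443 usually does too.
function startHttpsServer(domain: string, port = 443): Server {
  const paths = letsEncryptPaths(domain);
  const server = createServer(
    { cert: readFileSync(paths.cert), key: readFileSync(paths.key) },
    (_req, res) => {
      res.writeHead(200, { "Content-Type": "text/plain; charset=utf-8" });
      res.end("Hello over HTTPS\n");
    },
  );
  return server.listen(port);
}
```

Calling `startHttpsServer("example.com")` on a machine where certbot has issued a certificate for that domain would serve the placeholder response over HTTPS.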

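Which method you choose depends on business trade-offs. Having a third party manage the SSL connection for you is the easiest to set up, and brings other benefits such as load balancing, caching and analytics. But it also necessarily means ceding some control to that third party, and an unavoidable dependency on their service (and possibly payment, depending on the service you use and your traffic level).

Generating a certificate and having a CA sign it is how SSL used to be done, but Let's Encrypt may be easier if your provider supports it or your server team is technically proficient enough, and it's free. If you use a service at a higher level than plain cloud hosting, your provider often offers SSL as a service as well, so it's worth checking.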

原因

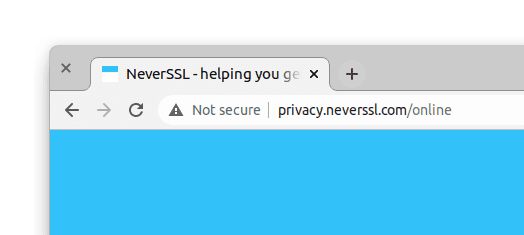

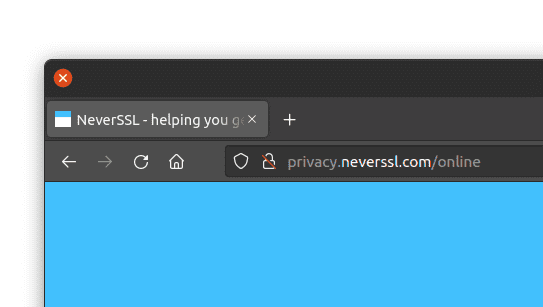

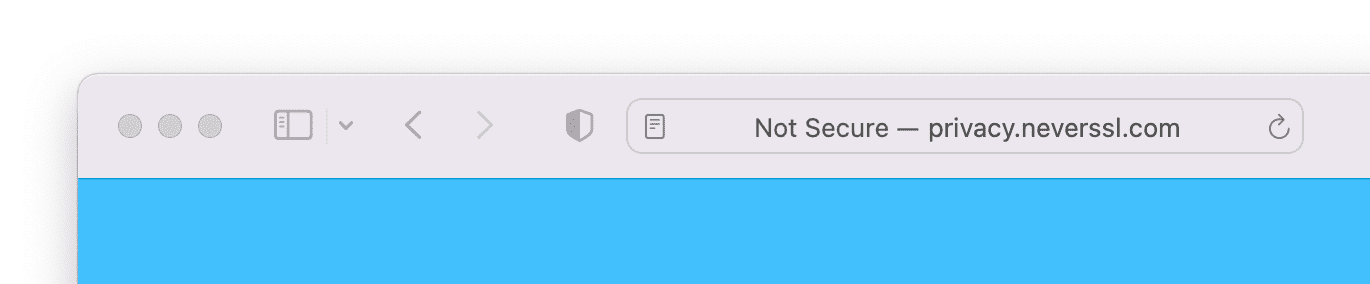

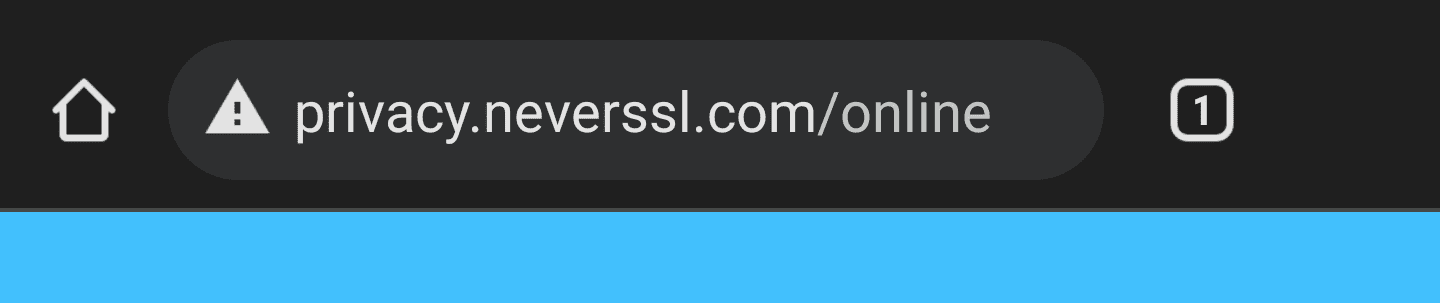

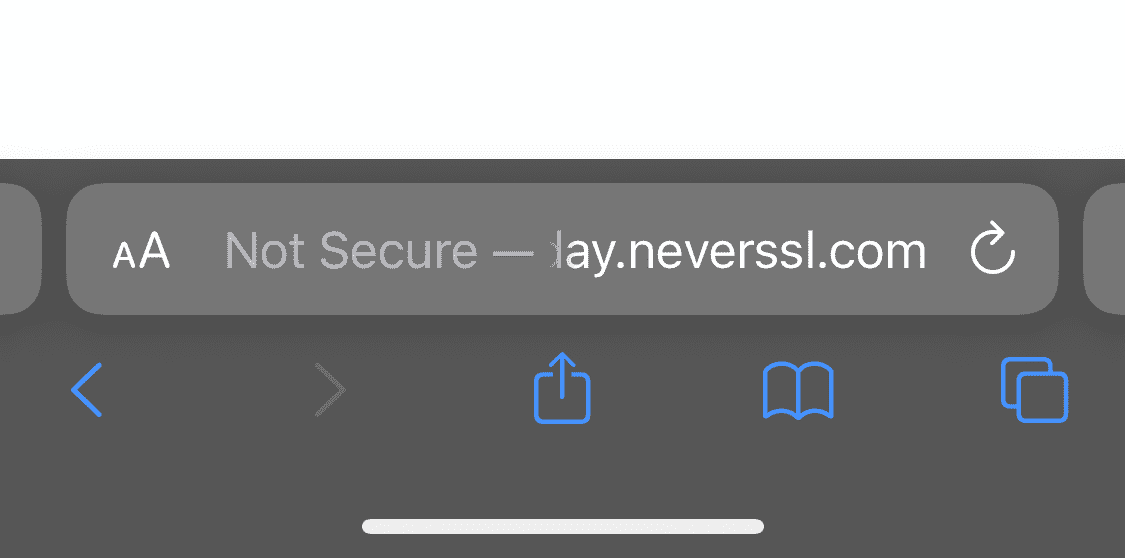

安全性是您隐私权故事的一部分:能够证明您可以保护用户数据免受干扰有助于建立信任。如果您不使用 HTTPS,您的网站也会被浏览器标记为“不安全”(并且已经有一段时间了)。现有的 JavaScript API 通常仅适用于 HTTPS 页面(“安全来源”)。它还可以保护您的用户免受他们的 Web 使用情况被他们的 ISP 看到。这当然是一个最佳实践;现在对于网站来说,几乎没有理由不使用 HTTPS。

浏览器如何呈现 HTTP(不安全)页面

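Redirect to HTTPS

If your site is available at both http: and https: URLs, you should redirect all access to http URLs to https. This is for the reasons above, and it also ensures that your site won't appear on whynohttps.com if it becomes popular. How to do this depends heavily on your infrastructure. If you're hosted on AWS, you can use a Classic or Application Load Balancer. Google Cloud is similar. In Azure, you can create a Front Door; in Node with Express, check request.secure; in Nginx, catch all traffic on port 80 and return 301; and in Apache, use a RewriteRule. If you use a hosting service, it will most likely handle redirecting to HTTPS URLs for you automatically: many services, such as Netlify, Firebase and GitHub Pages, do.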
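For a Node server without Express, the "catch everything on port 80 and return 301" approach can be sketched with the built-in `http` module. `CANONICAL_HOST` is a hypothetical constant: redirecting to a fixed hostname, rather than echoing the client-supplied `Host` header, keeps the redirect target under your control.

```typescript
import { createServer, type IncomingMessage, type Server, type ServerResponse } from "node:http";

// Hypothetical canonical hostname for the site.
const CANONICAL_HOST = "example.com";

// Builds the https: URL for a plain-HTTP request target such as "/a?b=1".
// Plain concatenation (rather than resolving with new URL) keeps a target
// like "//other.example/x" on our own host instead of switching hosts.
function httpsLocation(requestTarget: string | undefined): string {
  const path = requestTarget !== undefined && requestTarget.startsWith("/") ? requestTarget : "/";
  return `https://${CANONICAL_HOST}${path}`;
}

// Answers every plain-HTTP request with a permanent (301) redirect.
function redirectToHttps(req: IncomingMessage, res: ServerResponse): void {
  res.writeHead(301, { Location: httpsLocation(req.url) });
  res.end();
}

// Listens for plain HTTP; binding port 80 usually needs elevated privileges.
function startRedirectServer(port = 80): Server {
  return createServer(redirectToHttps).listen(port);
}
```

A 301 tells browsers and search engines that the move is permanent, which is what you want once the HTTPS version is the canonical one.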

HSTS

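HSTS, short for "HTTP Strict-Transport-Security", is a way to lock the browser into always using HTTPS for your service. Once you're happy with your migration to HTTPS, or if you've already made it, you can add the Strict-Transport-Security HTTP response header to your outgoing responses. A browser that has visited your site before will record that it saw this header, and from then on will automatically access the site over HTTPS, even if the user asks for HTTP. This avoids going through a redirect as described above: it's as if the browser silently "upgrades" every request to your service to HTTPS.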

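Similarly, you can serve the upgrade-insecure-requests directive of the Content-Security-Policy header with your pages. This is different from, but related to, Strict-Transport-Security. If you send Content-Security-Policy: upgrade-insecure-requests, requests from that page for other resources (images, scripts) are made over https, even if the link is http. (Upgrade-Insecure-Requests: 1 is the related request header that browsers send to signal that they prefer secure responses; it is not something your server sets.) However, the browser won't re-request the page itself over https, and it won't remember the directive for next time. In practice, upgrade-insecure-requests is useful if you're converting an existing site with many http: links to HTTPS and rewriting the link URLs in the content is difficult, but it's better to change the content where you can.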

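HSTS is primarily a security feature: it "locks" your site to HTTPS for users who have visited it before. But, as mentioned above, HTTPS is good for privacy, and HSTS is good for HTTPS. Likewise, upgrade-insecure-requests isn't really needed if you update all your content, but it's a useful "belt and braces" measure to add as defence in depth, to make sure your site is always served over HTTPS.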

Action

Add an HSTS header to your outgoing responses:

Strict-Transport-Security: max-age=300; includeSubDomains

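The max-age parameter sets how long, in seconds, the browser should remember and enforce the HTTPS upgrade. (Here it is set to 300 seconds, or five minutes.) Eventually you'll want to set it to 63072000, which is two years and is the value hstspreload.org recommends, but that is hard to undo if something goes wrong, because browsers that have already seen the header keep enforcing HTTPS until that time runs out. So it's recommended to start with a low number (300), test to confirm nothing breaks, and then increase the number in stages.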
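The staged increase can be written down as an explicit schedule. This is an illustrative sketch: only the 300-second starting value and the two-year target come from the text above; the intermediate one-week and 30-day stages are an assumed schedule, and `hstsHeaderValue` is a hypothetical helper.

```typescript
// Seconds in one day.
const DAY = 24 * 60 * 60;

// Illustrative rollout: five minutes, then (assumed) one week and 30 days,
// ending at two years (2 * 365 * 86400 = 63072000 seconds).
const HSTS_STAGES: readonly number[] = [300, 7 * DAY, 30 * DAY, 2 * 365 * DAY];

// Returns the Strict-Transport-Security value for a rollout stage. Stages
// past the end of the schedule stay at the final two-year value.
function hstsHeaderValue(stage: number): string {
  const index = Math.min(Math.max(stage, 0), HSTS_STAGES.length - 1);
  return `max-age=${HSTS_STAGES[index]}; includeSubDomains`;
}
```

For example, `hstsHeaderValue(0)` gives the five-minute header shown above, and only after each stage has run without problems would you move to the next one.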

Add the upgrade-insecure-requests directive to your outgoing responses:

Content-Security-Policy: upgrade-insecure-requests
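In a Node server, both response headers can be added in one place. A minimal sketch using types from `node:http`; `withSecurityHeaders` is a hypothetical helper name, and it uses the starting five-minute `max-age` from above. Browsers ignore `Strict-Transport-Security` when it arrives over plain HTTP, so the header only takes effect on responses served over HTTPS (directly or through a TLS-terminating proxy).

```typescript
import type { IncomingMessage, ServerResponse } from "node:http";

type Handler = (req: IncomingMessage, res: ServerResponse) => void;

// Start small, as recommended above, and raise it in stages.
const HSTS_VALUE = "max-age=300; includeSubDomains";

// Wraps a request handler so every response carries HSTS and asks the
// browser to upgrade this page's http: subresource requests to https:.
function withSecurityHeaders(handler: Handler): Handler {
  return (req, res) => {
    // Only honoured by the browser when received over HTTPS.
    res.setHeader("Strict-Transport-Security", HSTS_VALUE);
    // If the app already sends a Content-Security-Policy, add the directive
    // to that policy instead, since setHeader replaces any earlier value.
    res.setHeader("Content-Security-Policy", "upgrade-insecure-requests");
    handler(req, res);
  };
}
```

You would pass the wrapped handler to `createServer` from `node:https` (or from `node:http` behind a proxy that handles TLS).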

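End-to-end encryption

A good way to keep user data private is to not show it to anyone but the user, including yourself. This helps your trust stance a great deal: if you don't have your users'…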

How does it work?

This approach is frequently used by messaging applications, where it's referred to as "end-to-end encryption", or "e2e". Two people who know one another's keys can encrypt and decrypt the messages they exchange and send them through the messaging provider, but the provider, which doesn't have those keys, can't read them. Most applications are not messaging apps, but it is possible to combine two approaches (a solely client-side data store, and encryption with a key known only to the client) to store data locally but also send it, encrypted, to the server.

It is important to realise that this approach has limitations: it isn't possible for every service, and in particular it can't be used if you, as the service provider, need access to what the user stores. As described in part 2 of this series, it is best to follow the principle of data minimisation and avoid collecting data at all if you can. If the user needs data storage but you don't need access to that data to provide the service, end-to-end encryption is a useful alternative. If your service needs to see what the user stores in order to work, end-to-end encryption isn't suitable. But if it doesn't, the client-side JavaScript of your web service can encrypt any data it sends to the server and decrypt any data it receives.
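A minimal sketch of that client-side half, using the browser's built-in Web Crypto API (`crypto.subtle`, also available as a global in recent Node.js releases). It assumes AES-GCM with a 256-bit key held only by the client; `generateKey`, `encryptForUpload`, `decryptFromServer` and the `EncryptedPayload` shape are hypothetical names, and how the key is stored or shared is deliberately left out.

```typescript
// What the server stores. It never receives the key.
interface EncryptedPayload {
  iv: Uint8Array; // 96-bit nonce; must never repeat for the same key
  ciphertext: ArrayBuffer; // AES-GCM output, including the authentication tag
}

// Generates a key on the client. It is extractable only so that the user
// can keep or share it; the server never sees it.
function generateKey(): Promise<CryptoKey> {
  return crypto.subtle.generateKey({ name: "AES-GCM", length: 256 }, true, ["encrypt", "decrypt"]);
}

// Encrypts text before it leaves the client; only the result is uploaded.
async function encryptForUpload(key: CryptoKey, plaintext: string): Promise<EncryptedPayload> {
  const iv = crypto.getRandomValues(new Uint8Array(12));
  const ciphertext = await crypto.subtle.encrypt(
    { name: "AES-GCM", iv },
    key,
    new TextEncoder().encode(plaintext),
  );
  return { iv, ciphertext };
}

// Decrypts a payload fetched back from the server. Rejects if the
// ciphertext was altered, because AES-GCM authenticates the data.
async function decryptFromServer(key: CryptoKey, payload: EncryptedPayload): Promise<string> {
  const plaintext = await crypto.subtle.decrypt({ name: "AES-GCM", iv: payload.iv }, key, payload.ciphertext);
  return new TextDecoder().decode(plaintext);
}
```

AES-GCM is chosen here because it both encrypts and authenticates, so a server (or attacker) that modifies stored data causes decryption to fail rather than silently returning garbage.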

An example: Excalidraw

Excalidraw does this, and explains how in a blog post. It is a vector drawing app that stores drawings on the server, encrypted with a randomly chosen key. Part of the reason Excalidraw can implement end-to-end encryption with relatively little code is that cryptography is now built into the browser as the Web Crypto API, exposed as window.crypto and supported in all modern browsers. Cryptography is hard, and implementing the algorithms yourself comes with many edge cases. Having the browser do the heavy lifting makes encryption more accessible to web developers, and therefore makes it easier to implement privacy through encrypted data.

As Excalidraw describes in their write-up, the encryption key stays on the client side because it's part of the URL fragment. When a browser visits https://example.com/path?param=1#fraghere, the path (/path) and the query parameters (param=1) are sent to the server (example.com), but the fragment (fraghere) is not, so the server never sees it. Even though the encrypted data passes through the server, the encryption key does not, and privacy is preserved because the data is end-to-end encrypted.
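The fragment technique can be sketched with the same Web Crypto API: export the key as a JWK, put its `k` member (the base64url-encoded key bytes) in the fragment, and rebuild the key from it on the receiving client. This illustrates the idea rather than reproducing Excalidraw's code; the 128-bit key size, the function names and the `https://example.com/drawing/123` URL are assumptions.

```typescript
// Creates a random AES-GCM key that can be exported into a link.
function newKey(): Promise<CryptoKey> {
  return crypto.subtle.generateKey({ name: "AES-GCM", length: 128 }, true, ["encrypt", "decrypt"]);
}

// Builds a shareable link whose fragment carries the key. The fragment is
// not sent in the HTTP request, so the server only learns the path.
async function shareLink(baseUrl: string, key: CryptoKey): Promise<string> {
  const jwk = await crypto.subtle.exportKey("jwk", key);
  if (jwk.k === undefined) throw new Error("expected a symmetric (oct) key");
  const url = new URL(baseUrl);
  url.hash = jwk.k; // base64url characters need no escaping in a fragment
  return url.toString();
}

// Rebuilds the key from a link's fragment; this runs only on the client.
function keyFromLink(link: string): Promise<CryptoKey> {
  const k = new URL(link).hash.slice(1); // drop the leading "#"
  return crypto.subtle.importKey(
    "jwk",
    { kty: "oct", k, alg: "A128GCM", ext: true },
    { name: "AES-GCM" },
    true,
    ["encrypt", "decrypt"],
  );
}
```

Anyone holding the full link can decrypt the drawing, so the link itself becomes the secret; the server, which only ever sees `/drawing/123`, cannot.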

Limitations

This approach to encrypting user data is not foolproof. It contributes to your trust stance with your users, but it can't fully replace it. Your users still have to trust your service, because you could at any moment swap the client-side JavaScript for subtly different JavaScript that doesn't actually encrypt the data; and although a user can in principle detect that a website has done this, it's extremely difficult. In practice, your users still need to trust that you won't deliberately read and abuse their data after promising not to. However, showing that data is encrypted and unreadable by you as a (non-malicious) service provider can go a long way toward demonstrating that you're trustworthy.

It's also important to remember that one goal of end-to-end encryption is to stop you, the site owner, from reading the data. This is good for privacy, but it also means that if something goes wrong, you can't help. In essence, a service using end-to-end encryption puts the user in charge of the encryption keys. (This may not be obvious or explicit, but someone has to hold the key, and if the data is kept private from you, that someone isn't you.) If those keys are lost, there is nothing you can do to help, and any data encrypted with them is probably lost as well. There is a fine balance here between privacy and usability: keep data private from everybody using encryption, but avoid forcing users to become cryptography experts who manage their own keys securely.

Encryption at rest

As well as encrypting your users' data in transit, it's also important to consider encrypting the data you store on the server. This helps protect against data breaches, because anyone who gains unauthorised access to your stored data will only get encrypted data, which they will hopefully not have the keys to decrypt.

There are two different and complementary approaches to encrypting data at rest: encryption that you add yourself, and encryption that your cloud storage provider adds (if you use one). Storage provider encryption doesn't offer much protection against breaches through your own software, because it is usually transparent to you as a user of their service, but it does help against breaches of the provider's infrastructure. It's often simple to turn on, so it's worth considering. This field changes rapidly, and your security team (or the security-savvy engineers on your team) are best placed to advise on it, but all major cloud storage providers offer encryption at rest for object storage (Amazon S3 through a setting; Azure Storage and Google Cloud Storage by default) and for database storage (AWS RDS, Azure SQL and Google Cloud SQL, among others). Check with your cloud storage provider, if you use one.

Handling encryption of data at rest yourself, to help protect user data from breaches, is more difficult, because securely managing encryption keys and making them available to your code without also making them available to attackers is challenging. This isn't the best place to advise on security at that level; talk to your security-savvy engineers or dedicated team about it, or to external security agencies.
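To make "encryption that you add" concrete, here is a minimal sketch of encrypting a single field before it is written to storage, using Node's built-in `crypto` module with AES-256-GCM. The hard part the text warns about, key management, is assumed away: the hypothetical `FIELD_KEY` is generated at startup only so the sketch runs, whereas a real system would obtain it from a key management service or secret store, and key rotation is not handled.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// Hypothetical 32-byte key, generated here only for illustration. Data
// encrypted with it is unreadable after a restart; real keys come from a
// key management service or secret store, never from source code.
const FIELD_KEY: Buffer = randomBytes(32);

// Encrypts one value for storage as "iv.tag.ciphertext" (base64 parts).
function encryptField(plaintext: string, key: Buffer = FIELD_KEY): string {
  const iv = randomBytes(12); // fresh nonce for every encryption
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  return [iv, tag, ciphertext].map((part) => part.toString("base64")).join(".");
}

// Reverses encryptField. Throws if the value is malformed, was tampered
// with, or was encrypted under a different key.
function decryptField(stored: string, key: Buffer = FIELD_KEY): string {
  const parts = stored.split(".");
  if (parts.length !== 3) throw new Error("malformed encrypted field");
  const [iv, tag, ciphertext] = parts.map((part) => Buffer.from(part, "base64"));
  const decipher = createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString("utf8");
}
```

A database leak that exposes only the stored strings reveals nothing useful without the key, which is exactly why where that key lives matters so much.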